The vitality and success of Open Source Software (OSS) projects depend on their ability to attract, absorb and retain new developers [1] who decide to commit some of their time to the project. In recent years, new code hosting platforms like GitHub have emerged with the goal of promoting OSS projects and collaboration around them, thanks to their integration of social following, team management and issue-tracking features around an implementation of the pull-based model.

Roughly speaking, GitHub enables a distributed development model based on Git (though with some extensions). GitHub supports two main development strategies, aimed at (1) the project team members and (2) external developers. Team members have direct access to the source code, which they modify by means of pushes. External developers follow a pull-based model, in which any developer can work in isolation on a clone of the original source code (facilitated by forks in GitHub). Later, developers can send back their changes and request that they be integrated into the project codebase. This is called sending a pull request. Finally, pull requests are evaluated by project team members, who can either approve the pull request and incorporate the changes, or reject it and propose improvements that the proponent can then address. Beyond the project creator, other developers can be promoted to the status of official project collaborators and get most of the rights project owners have, so that they can help not only with development (by means of pushes, as said above) but also with management tasks (e.g., answering issues or providing support to other developers). Issue-tracking support lets both external developers and team members request new features and report bugs, and therefore fosters participation in the development process. People interested in the project can also become watchers to follow its evolution.

What makes some projects more successful than others?

Since there is still very limited understanding of why some projects advance faster than others, we asked ourselves whether using all these new collaboration features available in code hosting platforms like GitHub actually has a positive influence on the advancement of a project. Are popular projects (i.e., projects with more watchers, more issues added, more people trying to become collaborators…) really more successful?

This blog post reports our answer to this question, based on a quantitative analysis of all the GitHub projects created in the last two and a half years. As the metric for project success we chose the number of commits (which do not necessarily add code; they may also remove it). We believe this reflects better than other metrics the fact that the project is alive and improving. Several works have performed qualitative analyses of GitHub samples ([2, 3, 4] among others), but none has tried to determine criteria for project success.

Methodology for our quantitative analysis

To perform our study we took all GitHub projects created from 2012 onwards and collected a few relevant attributes for each of them.

GitHub Project Attributes

For each project we were interested in getting insights regarding the following characteristics:

- General information. We consider basic project information such as whether the project is a fork of another and the programming language used in its development.

- Development. We measure the development status of GitHub projects in terms of commits (totalCommits attribute) since their creation. As GitHub projects can receive commits from pushes (i.e., source code contributions coming from team members) and pull requests (i.e., source code contributions coming from accepted pull requests), we distinguish commitsPush and commitsPR attributes, respectively.

- Interest. Because GitHub is a social coding site, its projects can also be monitored, tracked and forked by users. We therefore focus on two main facilities provided by GitHub: watchers (watchers attribute) and forks (forks attribute). The former is the number of people interested in following the evolution of the project; they are notified when the project status changes (e.g., new releases, new issues, etc.). The latter is the number of people who have forked the project. Both attributes can provide good insights into the project's popularity [5].

- Collaborators. We consider the number of collaborators (collabs attribute) who have joined a project to help in its development.

- Contributions. We focus on contributions coming from (1) pull requests (PRs attribute) and (2) issues (issues attribute). In particular, we are interested in collecting the number of pull requests and issues that have been proposed (i.e., opened) for each project.

Mining GitHub

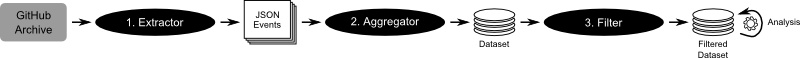

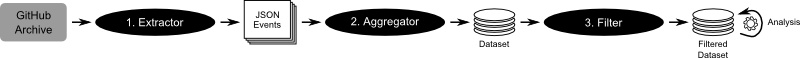

The mining process is illustrated in Figure 1 and is composed of three phases: (1) extracting the data, (2) aggregating the data to calculate and import the attribute values for each project into a database, and (3) filtering the database to build the subset of projects used for analysis (see Filter). Next, we describe each phase of the process.

Figure 1: Mining process.

Extractor. GitHub data has been obtained from GitHub Archive, which has tracked every public event triggered on GitHub since February 2011. GitHub events describe individual actions performed on GitHub projects, for instance, the creation of a pull request or a push. Events are represented in JSON format. There are 22 types of events but we focus on 7 of them from which we can get the data needed to calculate the project attributes described before. The considered event types are presented in Table 1.

Table 1: Events considered in the GitHub Archive extractor.

| Event Type | Triggering condition | Attributes Involved |
| --- | --- | --- |
| MemberEvent | A user is added as a collaborator to a repository | collabs |
| PushEvent | A user performs a push | commitsPush |
| WatchEvent | A user stars a repository | watchers |
| PullRequestEvent | A pull request is created, closed, reopened or synchronized | PRs, commitsPR |
| ForkEvent | A user forks (i.e., clones) a repository | forks |
| IssuesEvent | An issue is created, closed or reopened | issues |

Events are stored in GitHub Archive hourly. Our process collected all the events triggered per day since January 1st 2012 (starting date for our analyzed period).
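As a rough illustration of the extraction step, the sketch below reads a gzip-compressed stream of events and keeps only the event types listed in Table 1. It assumes one JSON object per line and a top-level `type` field; the function names and the tiny in-memory sample are hypothetical and are not taken from our actual tooling.

```python
import gzip
import io
import json

# Event types listed in Table 1.
RELEVANT_TYPES = {
    "MemberEvent", "PushEvent", "WatchEvent",
    "PullRequestEvent", "ForkEvent", "IssuesEvent",
}


def read_events(stream):
    """Yield the relevant events from a gzip-compressed, line-delimited JSON stream."""
    with gzip.open(stream, mode="rt", encoding="utf-8") as lines:
        for line in lines:
            line = line.strip()
            if not line:
                continue  # skip blank lines
            event = json.loads(line)
            if event.get("type") in RELEVANT_TYPES:
                yield event


def make_sample_archive():
    """Build a small in-memory archive (hypothetical data, for illustration only)."""
    records = [
        {"type": "PushEvent", "repo": "alice/demo"},
        {"type": "GollumEvent", "repo": "alice/demo"},  # not in Table 1, dropped
        {"type": "ForkEvent", "repo": "alice/demo"},
    ]
    raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")
    return io.BytesIO(gzip.compress(raw))


sample_types = [e["type"] for e in read_events(make_sample_archive())]
print(sample_types)
```

In a real run, `stream` would be one of the hourly archive files rather than an in-memory buffer.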

Aggregator. This component aggregates the events extracted in the previous step and calculates the attributes for each project.

The resulting dataset contained 7,760,221 projects. This dataset was curated to eliminate projects with missing information or that were formerly private projects (which would prevent us from getting the full picture of the project). The curated dataset contained 7,365,622 projects.
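The aggregation step can be pictured roughly as follows. This is a minimal sketch under several assumptions: events are simplified to flat dictionaries with hypothetical `repo`, `action`, `commits` and `merged` fields (the real archive payloads are nested and richer), and totalCommits is taken to be the sum of commitsPush and commitsPR.

```python
from collections import defaultdict

ATTRIBUTES = ("commitsPush", "commitsPR", "watchers", "forks",
              "collabs", "PRs", "issues")


def aggregate(events):
    """Fold a stream of simplified events into per-project attribute counters."""
    projects = defaultdict(lambda: dict.fromkeys(ATTRIBUTES, 0))
    for event in events:
        attrs = projects[event["repo"]]
        kind, action = event["type"], event.get("action")
        if kind == "PushEvent":
            attrs["commitsPush"] += event.get("commits", 0)
        elif kind == "PullRequestEvent":
            if action == "opened":
                attrs["PRs"] += 1
            elif action == "closed" and event.get("merged"):
                # Only commits of accepted pull requests are counted.
                attrs["commitsPR"] += event.get("commits", 0)
        elif kind == "IssuesEvent" and action == "opened":
            attrs["issues"] += 1
        elif kind == "WatchEvent":
            attrs["watchers"] += 1
        elif kind == "ForkEvent":
            attrs["forks"] += 1
        elif kind == "MemberEvent":
            attrs["collabs"] += 1
    for attrs in projects.values():
        # Assumption: total commits are the sum of both commit sources.
        attrs["totalCommits"] = attrs["commitsPush"] + attrs["commitsPR"]
    return dict(projects)


sample_events = [
    {"type": "PushEvent", "repo": "a/x", "commits": 3},
    {"type": "PullRequestEvent", "repo": "a/x", "action": "opened"},
    {"type": "PullRequestEvent", "repo": "a/x", "action": "closed",
     "merged": True, "commits": 2},
    {"type": "WatchEvent", "repo": "a/x"},
    {"type": "IssuesEvent", "repo": "b/y", "action": "opened"},
    {"type": "IssuesEvent", "repo": "b/y", "action": "closed"},
]
dataset = aggregate(sample_events)
print(dataset["a/x"])
```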

Filter. This component allows building subsets of the previous dataset in order to perform a more focused analysis. The filter takes as input the dataset from the previous step and creates a new filtered dataset containing only those elements fulfilling a particular condition.

In the context of our study, we built a new filtered dataset including only those projects that are not forks of another project and that explicitly state they are repositories with code in a given programming language. GitHub is used for many other tasks beyond software development (e.g., writing books) and we wanted to focus only on original software development projects. The resulting filtered dataset contained 2,126,093 projects and was the one used in all the other analyses presented in this blog post.
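The filter condition described above can be expressed as a simple predicate. The sketch below assumes each project is a dictionary with hypothetical `is_fork` and `language` fields; the sample data is invented for illustration.

```python
def is_original_software_project(project):
    """Keep projects that are not forks and that declare a programming language."""
    return not project.get("is_fork", False) and bool(project.get("language"))


def filter_projects(projects, predicate=is_original_software_project):
    """Return the subset of projects that satisfy the given condition."""
    return [p for p in projects if predicate(p)]


sample = [
    {"name": "alice/app", "is_fork": False, "language": "Java"},
    {"name": "bob/app", "is_fork": True, "language": "Java"},     # fork, dropped
    {"name": "carol/book", "is_fork": False, "language": None},   # no language, dropped
]
kept = [p["name"] for p in filter_projects(sample)]
print(kept)
```

Passing the condition as a parameter keeps the filter reusable for other subsets, such as projects in a single language.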

First of all, are projects in GitHub really using collaboration features?

Before we try to answer the question of whether using those features helps in the project advancement, we should check whether these features are used at all. To answer this question, we will characterize GitHub projects according to the attributes presented before and specifically study the use of collaboration facilities in them. Table 2 reveals that in fact they are not widely used.

Table 2: Project attributes results of the GitHub dataset.

Development attributes

| Attribute | Min. | Q1 | Median | Mean | Q3 | Max. |
| --- | --- | --- | --- | --- | --- | --- |
| totalCommits | 0.00 | 2.00 | 7.00 | 43.00 | 19.00 | 5545441.00 |
| commitsPush | 0.00 | 2.00 | 7.00 | 41.00 | 19.00 | 5545441.00 |
| commitsPR | 0.00 | 0.00 | 0.00 | 1.31 | 0.00 | 38242.00 |

Interest attributes

| Attribute | Min. | Q1 | Median | Mean | Q3 | Max. |
| --- | --- | --- | --- | --- | --- | --- |
| watchers | 0.00 | 0.00 | 0.00 | 2.26 | 1.00 | 14607.00 |
| forks | 0.00 | 0.00 | 0.00 | 0.68 | 0.00 | 2913.00 |

Collaborators and Contribution attributes

| Attribute | Min. | Q1 | Median | Mean | Q3 | Max. |
| --- | --- | --- | --- | --- | --- | --- |
| collabs | 0.00 | 0.00 | 0.00 | 0.05 | 0.00 | 7.00 |
| PRs | 0.00 | 0.00 | 0.00 | 0.96 | 0.00 | 8337.00 |
| issues | 0.00 | 0.00 | 0.00 | 0.29 | 0.00 | 1540.00 |
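For readers who want to reproduce this kind of six-number summary (Min., Q1, Median, Mean, Q3, Max.) for an attribute, a minimal sketch using only the Python standard library could look like the following. The quartile interpolation method is an assumption, and the sample values are invented to mimic a heavily skewed attribute.

```python
import statistics


def summarize(values):
    """Return min, quartiles, mean and max for a list of attribute values."""
    # Assumption: "inclusive" interpolation; other methods give slightly
    # different quartiles on small samples.
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "min": min(values),
        "q1": q1,
        "median": median,
        "mean": statistics.fmean(values),
        "q3": q3,
        "max": max(values),
    }


# Invented sample: most projects have no watchers, one has many.
watchers = [0, 0, 0, 0, 1, 2, 11]
summary = summarize(watchers)
print(summary)
```

A median of zero alongside a clearly positive mean, as in this toy sample, is the same pattern visible in Table 2: a few very active projects pull the mean up while the typical project stays at zero.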

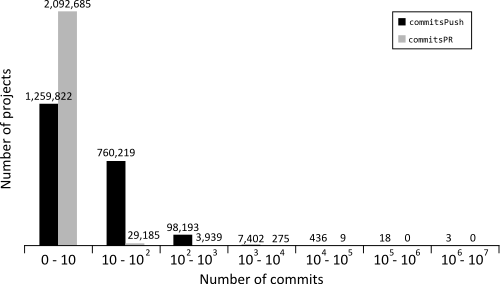

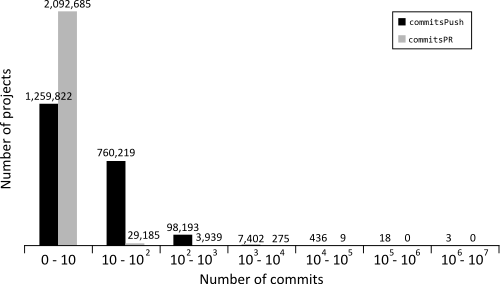

The results for development attributes such as totalCommits are strongly influenced by the fact that a considerable number of projects have a small number of commits. Thus, 1,259,822 projects (59.26% of the total number of projects) have between 0 and 10 commits from pushes (commitsPush) and 2,092,685 (98.47% of the total number of projects) have only between 0 and 10 commits from pull requests (commitsPR). Figure 2 illustrates this situation by showing the number of projects (vertical axis) per group of commits (horizontal axis).

Figure 2: Comparison between number of projects and number of commits coming from pull requests (commitsPR) and pushes (commitsPush).

Regarding the interest attributes, 1,433,042 projects (67.40% of the total number of projects) have 0 watchers and 1,614,556 projects (75.94% of the total number of projects) have never been forked. These results suggest that the use of GitHub is far from what would be expected of a social coding site.

The results for collaborator and contribution attributes also reveal very poor usage. Thus, 2,017,911 projects (94.91% of the total number of projects) do not use the collaborator role; 1,953,977 projects (91.90% of the total number of projects) have never received a pull request; and 1,949,644 projects (91.70% of the total number of projects) have never received an issue.

Therefore we can conclude that most projects do not make any use of GitHub features and use it purely as a kind of backup mechanism. The great majority of projects show a low activity (i.e., totalCommits, commitsPush and commitsPR) and attract low interest (i.e., forks and watchers).

But those that do, do they get any benefits?

If so, this would be a good reason for the other projects to follow suit. Let’s see then if popular projects that attract a lot of interest (plenty of forks and watchers) and manage to involve a large community (that opens issues, becomes collaborators, submits pull requests) end up having more commits in the repository than others.

To answer this question, we will perform a correlation analysis among the involved attributes. More specifically, we resort to Spearman’s rho (ρ) correlation coefficient to confirm the existence of a correlation. This coefficient is used in statistics as a non-parametric measure of statistical dependence between two variables. The values of ρ are in the range [-1, +1], where a perfect correlation is represented either by a -1 or a +1, meaning that the variables are perfectly monotonically related (a decreasing or an increasing relationship, respectively). The closer ρ is to 0, the more independent the variables are.
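To make the coefficient concrete, here is a small self-contained sketch that computes Spearman's ρ as the Pearson correlation of the ranks, with tied values sharing their average rank. It is an illustration with invented numbers, not the tooling used for the study; function names are hypothetical.

```python
import math


def average_ranks(values):
    """Assign 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of positions start+1 .. end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(xs, ys):
    """Pearson correlation of two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return cov / math.sqrt(var_x * var_y)


def spearman_rho(xs, ys):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))


# Invented example: commits grow with pull requests, though not linearly.
prs = [0, 1, 2, 5, 40]
commits = [1, 3, 9, 20, 1000]
rho_up = spearman_rho(prs, commits)
rho_down = spearman_rho(prs, list(reversed(commits)))
print(rho_up, rho_down)
```

Because only the ordering matters, the strongly non-linear growth in the example still yields a perfect positive correlation, and reversing one variable yields a perfect negative one.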

Table 3 shows the ρ values for each combination of attributes we wanted to evaluate. The first three rows focus on the correlation between the number of collaborators, pull requests and issues and the number of commits of the project. As you can see there is no correlation (except for the somewhat obvious correlation between the number of pull requests and the commits derived from accepting them, as long as they are accepted, but with basically no impact on the global number of commits) among them. The last rows show there is no correlation either between the number of people following the project and the commits.

Table 3: Correlation analysis between the considered attributes.

| | Success attributes | | |
| --- | --- | --- | --- |
| | totalCommits | commitsPush | commitsPR |
| collabs | 0.09 | 0.09 | 0.06 |
| PRs | 0.27 | 0.25 | 0.88 |
| issues | 0.25 | 0.25 | 0.34 |
| watchers | 0.11 | 0.10 | 0.24 |
| forks | 0.08 | 0.07 | 0.36 |

It is important to note that during our study we also calculated the correlation values among all these attributes when grouping the projects according to several dimensions, especially their size and the language used. None of those groupings revealed different results from those shown above.

Threats to Validity

In this section we describe the threats to validity we have identified in our study.

External Validity. Our study considers a large dataset of GitHub projects; however, it may not represent the universe of all real-world projects. In particular, as GitHub allows users to create open source repositories without any expense, our dataset might include mock or personal projects that are not focused on attracting contributions and have been open sourced only to avoid paying the membership fees required to keep them private.

Internal Validity. Our study only considers GitHub data and therefore does not take into account external tools used by some GitHub projects to manage the team and issues, which can bias our study. For instance, when people attach patches to an external Bugzilla bug tracker and the project owner later merges them manually, those patches are not counted as pull requests. Finally, using the language attribute to filter out non-software projects may eliminate relevant projects, since some software projects do not set the programming language used.

If popularity is not a good indicator, what determines the success of a project?

Honestly, we think by now it’s clear that we have no idea. We have learnt about quite a few things that do not correlate with success but have yet to find one that does, probably because there is no single reason for it, or at least not one simple enough to be easily measured. Still, being able to shed some light on this issue, even if only partially, would be very beneficial for the OSS community, so it is worth continuing to try.

To try to get some more insights on this we have complemented this quantitative analysis with a more qualitative one where we conducted a manual inspection of the 50 most successful GitHub projects in our dataset (success measured in terms of the number of commits of the project coming from pull requests, i.e., from external contributors). We noticed that 92% of them (i.e., 46 projects) included a description file (i.e. readme), with, often, a link to complementary information in wikis (46%) and/or external websites (50%). A further manual inspection of these three kinds of project information sources revealed that they were not purely “decorative” but that instead included precise information on the process to follow for all those willing to contribute to the project (e.g., how to submit a pull request, the decision process followed to accept a pull request or an issue, etc.). We have compared these numbers with random samples of projects to confirm they are not just average values for the GitHub population.

This hints at the reasonable possibility that having a clear description of the contribution process is a significant factor in attracting new contributions. Unfortunately, existing GitHub APIs and services do not provide direct support to automatically check our hypothesis on the whole population of GitHub projects, so further research on this requires conducting other kinds of empirical analysis, like interviews with contributors and project managers. If this is confirmed, it would open plenty of other interesting questions, like whether some kinds of contribution processes (also known as governance rules) attract more contributors than others (e.g., a dictatorship approach versus a more open process to accept pull requests). This could help project owners decide whether to adopt a more transparent governance process in order to advance faster in the project development. See [6] for a deeper discussion on this.

About the Authors

Javier Luis Cánovas Izquierdo is a postdoctoral fellow at IN3, UOC, Barcelona, Spain.

Valerio Cosentino is a postdoctoral fellow at EMN, Nantes, France.

Jordi Cabot is an ICREA Research Professor at IN3, UOC, Barcelona, Spain.

References

[1] C. Bird, A. Gourley, P. Devanbu, A. Swaminathan, and G. Hsu. Open Borders? Immigration in Open Source Projects. In MSR conf., 2007.

[2] J. Choi, J. Moon, J. Hahn, and J. Kim. Herding in open source software development: an exploratory study. In CSCW conf., pages 129–133, 2013.

[3] G. Gousios, M. Pinzger, and A. van Deursen. An Exploratory Study of the Pull-based Software Development Model. In ICSE conf., pages 345–355, 2014.

[4] F. Thung, T. F. Bissyande, D. Lo, and L. Jiang. Network Structure of Social Coding in GitHub. In CSMR conf., pages 323–326, 2013.

[5] T. F. Bissyande, D. Lo, L. Jiang, L. Reveillere, J. Klein, and Y. L. Traon. Got issues? Who cares about it? A large scale investigation of issue trackers from GitHub. In ISSRE symp., pages 188–197, 2013.

[6] J. Cánovas and J. Cabot. Enabling the Definition and Enforcement of Governance Rules in Open Source Systems. In ICSE – Software Engineering in Society (ICSE-SEIS), to appear.